What happens when a tool as powerful as artificial intelligence operates without clear boundaries in a world that runs on data-driven decisions, and what does that mean for fairness and trust? Picture a major corporation using an AI system for hiring, only to discover that the algorithm has been quietly rejecting qualified candidates because of biases no one had detected. Such scenarios are far from hypothetical. As AI reshapes industries from healthcare to human resources, the absence of structured oversight puts both fairness and public confidence at risk. This gap between innovation and accountability is the starting point for examining how governance can turn ambition into tangible results.

Why AI Governance Demands Urgent Attention

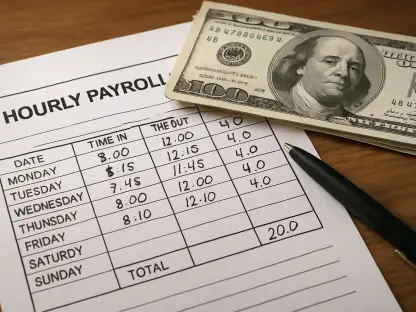

The rapid integration of AI into everyday operations has sparked both awe and concern. In sectors like talent management, algorithms now screen resumes and predict employee success, yet unexplained decisions can erode confidence among stakeholders. A recent study revealed that over 60% of HR professionals worry about bias in AI tools, highlighting a tangible fear of unchecked systems. The question looms large: how can accountability be ensured when technology often outpaces regulation?

Beyond individual businesses, the societal implications are profound. AI influences everything from public policy to personal privacy, often handling sensitive data with little transparency. Without robust frameworks, the risk of misuse or unintended harm grows, making governance not just a technical necessity but a societal imperative. This urgency drives the need to address these challenges head-on, before trust is irreparably damaged.

The High Stakes of Governing AI Today

The significance of AI governance extends far beyond boardroom discussions, touching on real-world impacts across regulated industries. In healthcare, for instance, AI systems manage patient data, where a single error or breach can have life-altering consequences. Similarly, in HR, automated tools shape career trajectories, amplifying the need for ethical standards to protect individual rights while fostering innovation.

A notable step in this direction is the European Union’s voluntary General-Purpose AI Code of Practice, which signals a growing commitment to tackling ethical dilemmas. Yet while this framework marks progress, it also exposes a stark divide between intent and implementation. Many organizations still struggle to translate high-level policies into day-to-day practices, a gap that could hinder both business growth and public safety.

The stakes are twofold: societal trust and economic vitality. Governance must strike a delicate balance, ensuring that AI systems safeguard fundamental rights without stifling the potential for breakthroughs. This dual challenge underscores why governance is not a luxury but a cornerstone for sustainable advancement in an AI-driven era.

Unpacking the Core Hurdles in AI Governance

Navigating the path from policy to practice reveals several critical obstacles. On a societal level, AI’s reach extends into shaping cultural norms and individual opportunities, particularly in fields like HR where biased algorithms can perpetuate inequality. The ethical responsibility to mitigate such risks demands rigorous scrutiny of how these tools are designed and deployed, ensuring they serve rather than harm communities.

Operationally, businesses face the daunting task of embedding transparency into complex AI systems. This becomes especially challenging in hybrid IT and cloud environments, where data flows across multiple platforms. Achieving auditability and interoperability is essential, yet many organizations lack the maturity to integrate these principles, leaving systems vulnerable to oversight failures and compliance issues.
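To make cross-platform auditability more concrete, here is a minimal Python sketch of one common technique, a hash-chained, append-only audit log. Each AI decision is recorded together with the system that produced it and the model version, and each entry stores the hash of the entry before it, so a later edit to any record becomes detectable. The `AuditLog` class, its fields, and the system names are hypothetical illustrations, not part of any product mentioned in this article; a production log would also need timestamps, durable storage, and access controls.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only log: each entry carries the previous entry's
    hash, so editing any earlier record breaks the chain."""
    entries: List[dict] = field(default_factory=list)

    def append(self, system: str, model_version: str, decision: str,
               reviewer: Optional[str] = None) -> dict:
        body = {
            "system": system,              # platform that produced the decision
            "model_version": model_version,
            "decision": decision,
            "reviewer": reviewer,          # None when no human was involved
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            # Both the link to the previous entry and the entry's own hash must match.
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("cloud-ats", "screener-v3", "advance c1")
log.append("onprem-hris", "screener-v3", "reject c2", reviewer="j.doe")
print(log.verify())                        # True
log.entries[0]["decision"] = "reject c1"   # simulate an after-the-fact edit
print(log.verify())                        # False
```

Because every entry is hashed over the same canonical fields, the log can be collected from several platforms (here the hypothetical `cloud-ats` and `onprem-hris`) and still be checked end to end in one place.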

Then there is the tension between regulation and innovation. Industry giants such as Siemens and SAP have warned that overly stringent rules could hamper economic growth, a concern that carries more weight as AI adoption climbs to 35% among enterprises in 2025. Striking a balance means learning from companies that have scaled AI while adhering to ethical guidelines, cases that suggest governance can coexist with progress.

Industry Voices on the Governance Frontier

Expert perspectives shed light on the complexities of this landscape. Bill Conner, President & CEO of Jitterbit, emphasizes the importance of building accountability directly into AI systems. “Transparency isn’t an add-on; it’s the foundation of trust in technology,” Conner asserts, advocating for tools that provide visibility at every stage of deployment. His insight points to a growing consensus that governance must be proactive rather than reactive.

Contrasting views come from major players like Siemens and SAP, who caution against overregulation. Their concern centers on preserving the digital business models that fuel economic expansion, and they warn that heavy-handed policies could deter investment. This debate illustrates a broader struggle: how to protect rights without curbing the competitive edge that AI offers businesses globally.

To ground these discussions, consider a business leader navigating compliance in a multinational firm. Tasked with implementing AI for talent acquisition, they face conflicting regional standards and internal pushback on transparency. Such real-world dilemmas highlight the urgency of aligning governance with practical needs, making abstract policies feel immediate and actionable for those on the front lines.

Practical Steps for Embedding AI Governance

Turning theory into action requires concrete strategies tailored to organizational realities. One key approach is designing AI systems with transparency from the outset, using platforms like Jitterbit Harmony for seamless integration and compliance tracking. Such tools enable businesses to monitor workflows across diverse systems, ensuring ethical standards are met without sacrificing efficiency.

Another vital tactic is adopting “white box” principles, where AI processes remain explainable to users. This builds trust among employees and clients, particularly in sensitive areas like talent management. Coupled with human-in-the-loop oversight for high-stakes decisions, this method ensures that technology augments rather than replaces human judgment, maintaining a critical balance.
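A minimal sketch of how human-in-the-loop routing and "white box" explanations might fit together in a screening workflow, assuming a model that returns a score plus a list of plain-language reasons. In this sketch, only confident, explained recommendations advance a candidate automatically; every rejection, borderline score, or unexplained output is queued for a person. The class names and the 0.8 threshold are illustrative assumptions, not values taken from any real tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Recommendation:
    candidate_id: str
    score: float        # hypothetical model score in [0, 1]; higher = stronger match
    reasons: List[str]  # plain-language factors behind the score ("white box")


@dataclass
class ReviewRouter:
    # Illustrative policy value: only confident, explained recommendations
    # may move a candidate forward without a person reviewing them first.
    advance_threshold: float = 0.8
    review_queue: List[Recommendation] = field(default_factory=list)

    def route(self, rec: Recommendation) -> str:
        explained = len(rec.reasons) > 0
        if explained and rec.score >= self.advance_threshold:
            return "advance"  # lower-stakes outcome: candidate moves to the next stage
        # Rejections, borderline scores, and unexplained outputs are treated
        # as high-stakes and left for a human reviewer to decide.
        self.review_queue.append(rec)
        return "human_review"


router = ReviewRouter()
print(router.route(Recommendation("c1", 0.92, ["5 years Python", "led a team of 4"])))  # advance
print(router.route(Recommendation("c2", 0.35, ["missing certification"])))              # human_review
print(router.route(Recommendation("c3", 0.95, [])))                                     # human_review
print(len(router.review_queue))                                                         # 2
```

Keeping rejections out of the automated path is a deliberate choice here: it is the outcome with the greatest consequence for the candidate, so it is the one most clearly suited to human judgment.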

Finally, robust data architectures are essential for lifecycle management and real-time governance. By prioritizing structures that support continuous monitoring, organizations can adapt to evolving regulations and use cases. These steps, aimed at business and IT leaders, position governance as an enabler of scalable AI adoption, transforming it from a burden into a strategic asset for responsible growth.
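As one illustration of continuous monitoring in the hiring context discussed earlier, a pipeline could periodically compare selection rates across applicant groups. The sketch below uses the four-fifths (80%) rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures as its alert criterion; the choice of metric, the function name, the group labels, and the counts are illustrative assumptions rather than anything prescribed by the frameworks mentioned in this article.

```python
from typing import Dict, Tuple


def adverse_impact_alerts(
    outcomes: Dict[str, Tuple[int, int]], threshold: float = 0.8
) -> Dict[str, float]:
    """Flag groups whose selection rate is below `threshold` times the
    highest group's rate (the four-fifths rule of thumb).

    `outcomes` maps a group label to (selected, applicants).
    Returns {group: impact_ratio} for every flagged group.
    """
    rates = {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}  # nobody selected yet, so there is no reference rate
    # Impact ratio: each group's selection rate relative to the top group's rate.
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical counts from one week of automated screening: (selected, applicants)
week = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
print(adverse_impact_alerts(week))  # {'group_b': 0.6}
```

In a real deployment, a check like this would run on a schedule against fresh outcome data, and an alert would trigger investigation rather than an automatic conclusion of bias, since the four-fifths rule is a screening heuristic, not a legal finding on its own.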

Looking back, the discourse around AI governance has evolved significantly as stakeholders grapple with its dual nature as both opportunity and risk. Industry leaders and policymakers alike are wrestling with frameworks that aim to protect without paralyzing progress. The path forward centers on actionable measures: transparency in design, explainable systems, and adaptive oversight, which together offer a blueprint for organizations navigating this terrain. As technology continues to advance, a commitment to embedding accountability remains the guiding principle for ensuring that AI’s potential is harnessed with integrity for generations to come.